At PION, we recently created a new Developer Experience (DevEx) team which has the primary goal of making the work life of our developers better. The main way we’re going to be doing this is by improving our tooling and development processes.

One of the first initiatives we decided to take on is improving the speed and reliability of our GitLab CI pipelines. Below are a few of the things we changed, with some great support from the platform team 🙌, and the improvements that came from them.

If you’re interested in the technical side of how we implemented the changes detailed below, you can find an example repository here which contains a sample GitLab configuration and CI pipeline that I used to test the changes.

Why?

The main reason for speeding up our CI/CD pipelines is to save developer time and toil.

Considering developer time is also one of our most expensive resources, allowing our devs to save time and make changes quicker will be of great benefit to the company. Additionally, if pipeline durations are reduced to within a couple of minutes (or seconds), developers will not need to start working on another task, reducing the mental fatigue associated with context switching and lowering the chance of burnout.

Since faster pipelines also lead to faster deployments, there are several other knock-on effects, such as:

- Faster product delivery and outer feedback loops - we can get product changes out for user testing quicker.

- Faster incident recovery - if a breaking change makes it past testing (no test suite is perfect 😅), developers can put a fix out quicker, reducing the impact on users and potential lost revenue.

What We Changed

Here are the five key changes we made to speed up our GitLab CI pipelines, along with an example of how they could be implemented:

- Running Jobs in Kubernetes

- Adding Distributed Caching

- Container Image Layer Caching

- Parallelizing Large Jobs

- Taking Advantage of the needs Keyword

Running Jobs in Kubernetes

Where the pipeline jobs run can make a massive difference to how quickly jobs start and the resources available to them.

GitLab uses the GitLab Runner application to run jobs in a pipeline. The environment the job is run in is defined by the runner’s executor, of which there are many choices.

The original executor setup we were using was docker+machine which in our case spun up a new AWS EC2 instance for each job, autoscaling based on demand. While the docker+machine executor provided great isolation and resource control for jobs, with the intermittent demand of CI pipelines and the runner's auto-scaling not being perfect, spinning up a new EC2 instance per job on average took ~3mins.

Long spin-up times lead to pipelines with a large number of jobs being laden with a lot of idle time… not ideal when developers are waiting for critical deployments.

To remove the ~3min delay per job, we moved our jobs to run inside a Kubernetes cluster using the GitLab Runner Kubernetes executor.

Moving jobs to run in Kubernetes came with several advantages, two of which were:

- Less waiting time - The Kubernetes nodes are already running, so there is little waiting time to start a pod compared to spinning up a new AWS EC2 instance.

- Better resource optimization - CI jobs have a wide range of resource requirements. Running in Kubernetes allows each job to define its own resource requests and limits, which makes resource optimization easier and more fine-grained than creating different runners for different AWS EC2 instance sizes.
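As a rough sketch of the per-job resource requests mentioned above, the Kubernetes executor lets a job override its pod resources through CI/CD variables. The job names, images, and sizes below are illustrative assumptions rather than our actual configuration, and the overrides only take effect if the runner's configuration permits them (e.g. through settings such as cpu_request_overwrite_max_allowed).

```yaml
# Hypothetical jobs: names, images and sizes are made up for illustration.
lint:
  image: ruby:3.1
  variables:
    # A small job asks for a small slice of a node.
    KUBERNETES_CPU_REQUEST: "250m"
    KUBERNETES_MEMORY_REQUEST: "256Mi"
    KUBERNETES_MEMORY_LIMIT: "512Mi"
  script:
    - bundle exec rubocop

integration-tests:
  image: ruby:3.1
  variables:
    # A heavier job requests more, without needing a separate runner.
    KUBERNETES_CPU_REQUEST: "2"
    KUBERNETES_MEMORY_REQUEST: "4Gi"
    KUBERNETES_MEMORY_LIMIT: "4Gi"
  script:
    - bundle exec rspec
```

Both jobs can be picked up by the same runner, with the scheduler placing each pod according to what it asked for.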

Adding Distributed Caching

One of the most effective ways to reduce pipeline duration is to reduce the amount of time spent downloading and building application dependencies. Caching can be used to store dependencies built in a previous pipeline run.

When CI jobs are running in containers (as they do with the Kubernetes executor), local storage is ephemeral, so any build dependencies created in a job are lost on completion and are not available to subsequent jobs.

A common way to allow dependencies to be persistently stored and shared between jobs is by creating a distributed cache. This is where a compatible cloud storage service, such as AWS S3, is used to store the cached files, which are downloaded at the start of a job and uploaded again at the end.

We decided to go for AWS S3 for our runner cache due to its high availability, and ease of setup and maintenance.
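On the job side, using the distributed cache could look something like the sketch below. It assumes a Ruby project using Bundler and a runner that already has its S3 cache configured (that part lives in the runner's own configuration and is not shown here). The job name and paths are hypothetical.

```yaml
# Hypothetical job: caches installed gems between pipeline runs.
install-gems:
  stage: build
  image: ruby:3.1
  cache:
    key:
      files:
        # A new cache is created only when the lockfile changes.
        - Gemfile.lock
    paths:
      - vendor/bundle
  script:
    # Install into the project directory so the path can be cached.
    - bundle config set --local path vendor/bundle
    - bundle install
```

Keying the cache on the lockfile means unchanged dependencies are restored from S3 instead of being installed from scratch.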

Container Image Layer Caching

If you run your application in containers, it’s likely you’ll have to rebuild your container images on each deployment pipeline run.

When building container images locally, tools such as Docker cache container image layers, preventing the need to rebuild identical layers. The layer cache can save considerable time, as when parts of the application don’t change, such as the dependencies, the image layers can be re-used instead of rebuilt.

As mentioned in the above section, when CI jobs run in containers, local storage is ephemeral, so any layer caches will be lost on job completion.

To take advantage of layer caching in pipelines, the image layers must first be pulled from an external cache, such as the registry you push your images to for deployment, e.g. DockerHub or AWS ECR. For example, when using Docker to build images:

docker pull <image name>:latest

docker build --cache-from <image name>:latest -t myimage:latest .

Building Images with Cache in Kubernetes

As CI jobs using the Kubernetes executor run in containers, using docker to build images in Kubernetes is classed as using Docker in Docker (DinD). Using DinD can lead to multiple issues, particularly in regard to security since most DinD setups require using the --privileged option on the parent container, so it tends to be best to avoid it if possible.

Another tool that can be used to build container images is Kaniko, which is also recommended by GitLab. Kaniko uses a custom container image to build from a Dockerfile and source code and also comes with a bunch of other useful features, such as remote image layer caching.

GitLab has a great guide on how to build images in CI jobs using the Kaniko debug container image. To add image layer caching to their example, add the --cache=true and --cache-repo <cache repo> options to the /kaniko/executor command. An example of this can be seen in the example repository here.

Another cool feature of Kaniko is layer cache expiry. Using the --cache-ttl <duration> option, cached layers will expire after a set period. Layer expiry allows all steps of the Dockerfile to be run periodically, so RUN commands which update the image will not be cached forever, keeping your image up to date. The cache expiry can be useful, particularly if you implement scheduled pipelines to rebuild images on older applications that are not in active development.
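Putting those options together, a Kaniko build job with remote layer caching might look roughly like this. It is a sketch based on the shape of GitLab's guide, not a copy of our pipeline: the job name, the cache repository path, and the 168h expiry are assumptions, and registry authentication is left out.

```yaml
# Hypothetical job: builds and pushes an image with Kaniko layer caching.
build-image:
  stage: build
  image:
    name: gcr.io/kaniko-project/executor:debug
    # The debug image ships a shell; clear the entrypoint so GitLab can run the script.
    entrypoint: [""]
  script:
    # YAML folds these lines into one command.
    # --cache-repo is where cached layers are stored,
    # --cache-ttl is how long a cached layer stays valid.
    - /kaniko/executor
      --context "${CI_PROJECT_DIR}"
      --dockerfile "${CI_PROJECT_DIR}/Dockerfile"
      --destination "${CI_REGISTRY_IMAGE}:${CI_COMMIT_SHORT_SHA}"
      --cache=true
      --cache-repo "${CI_REGISTRY_IMAGE}/cache"
      --cache-ttl 168h
```

With a one-week expiry, a scheduled weekly pipeline would rebuild every layer at least once per week.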

Optimise Dockerfile for Layer Caching

To take full advantage of layer caching, the Dockerfile being built from will need to be optimised for caching. There are plenty of articles out there on how to do this for a specific language or framework, e.g. this one from Florin Lipan, but two key things to do are:

Firstly, follow the Dockerfile best practices.

Secondly, copy and build more stable parts of the application, such as dependencies, before the rest of the application. For example, when building a Ruby application that uses Bundler, the Gemfile & Gemfile.lock can be copied first to allow for the layers to be cached if the Gems are not changed in subsequent builds:

FROM ruby:3.1

# …

COPY Gemfile Gemfile.lock ./

RUN bundle install

COPY . ./

# …

A layer is re-used from the cache only if the hash of the files it copies has not changed. If you only have one COPY command that copies in all the files with COPY . ./, some files will almost certainly have changed on the next pipeline run (as that's a common reason for a deployment pipeline to run). That change busts the cache for every step after the COPY, so the gems are installed again on each build.

Parallelizing Large Jobs

If you can't avoid running a task via caching or other means, then another way to speed up the task's overall execution is through parallelization.

In CI, parallelization is where a task is split up across multiple machines (or pods) and its execution is optimised for: overall runtime, amount of computing resources, and test overhead (those unavoidable setup costs per job such as downloading the source code). It’s commonly used for large test suites.

Luckily, GitLab has parallel jobs built into its CI system, which can be enabled using the parallel keyword.

GitLab has a good guide on how to set up parallel jobs. An example would be splitting JavaScript Jest tests using the --shard option:

    test:
      parallel: 5
      script:
        - yarn jest --shard "$CI_NODE_INDEX/$CI_NODE_TOTAL" --maxWorkers=1

Taking Advantage of the needs Keyword

When running pipelines with lots of dependent jobs, using the needs keyword instead of the dependencies keyword can lead to improvements in overall pipeline duration.

The needs keyword allows jobs to be executed as soon as the jobs they depend on have completed, out of stage order. Not waiting for all the jobs in a stage to complete can be useful in larger applications, such as those with multiple build stages.

For example, in a full-stack application that builds and tests both the frontend UI and the backend API, it's likely that each of the unit test jobs will not require the entirety of the application’s dependencies to be built. Therefore, if the UI dependencies are built before the API’s, the UI's test jobs can start sooner, out of stage order, reducing the overall pipeline run time if the UI specs are very long.
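A minimal sketch of that full-stack scenario is shown here. The job names and commands are hypothetical and assume a Yarn-based UI and a Bundler-based API.

```yaml
stages:
  - build
  - test

build-ui:
  stage: build
  script:
    - yarn install --frozen-lockfile

build-api:
  stage: build
  script:
    - bundle install

test-ui:
  stage: test
  # Starts as soon as build-ui finishes, even if build-api is still running.
  needs: ["build-ui"]
  script:
    - yarn jest

test-api:
  stage: test
  # Only waits for the API build.
  needs: ["build-api"]
  script:
    - bundle exec rspec
```

Without the needs entries, both test jobs would wait for every job in the build stage to finish.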

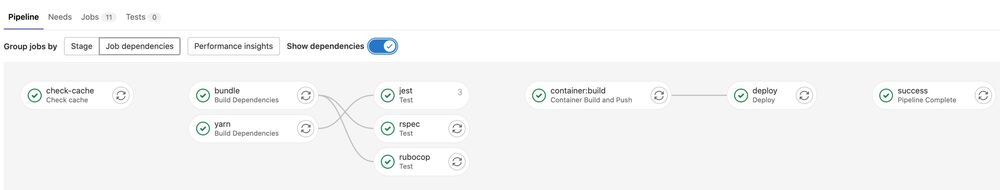

When optimizing a GitLab CI pipeline using needs, GitLab generates a Directed Acyclic Graph (DAG) to define the job order and aid visualization. For example, the sample project’s DAG looks like this:

Other Refactors

Here are some of the other refactors we did along the way which aren’t strictly speed-related.

Shared CI Config

When you’re working with 100+ projects, including a shared configuration file can make global changes considerably easier and quicker.

By using the include keyword in GitLab CI, steps and stages from other configuration files located outside of the local repository can be merged into a project’s pipeline configuration.

For example, throughout our projects, we extracted common CI steps for building, scanning, and pushing container images, into a global shared CI configuration file, e.g. shared.gitlab-ci.yml.

Projects can then include the global CI configuration and use the extends keyword to merge any required shared configurations into their jobs, e.g. .gitlab-ci.yml.

The shared configuration gives a standardised process for container creation and when changes need to be made there is only one place to update.
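As an illustration, a project's .gitlab-ci.yml that pulls in the shared file might look like the sketch below. The project path, the hidden job name .build-container-image, and the IMAGE_NAME variable are all hypothetical. The sketch assumes the hidden job is defined in shared.gitlab-ci.yml.

```yaml
# .gitlab-ci.yml in an application project (names are illustrative).
include:
  # Pull the shared configuration from a central repository.
  - project: "devex/ci-templates"
    ref: main
    file: "/shared.gitlab-ci.yml"

build-image:
  # Merge in the shared job definition, then override what differs.
  extends: .build-container-image
  variables:
    IMAGE_NAME: my-service
```

Pinning ref to a branch means every project picks up changes to the shared file on its next pipeline run. Pinning to a tag instead would trade that convenience for more controlled rollouts.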

Cancelling Redundant Pipelines

When there are a lot of commits being made, cancelling outdated pipelines saves considerable compute. To take advantage of this feature, we have configured our projects to cancel all pipelines except some specific deployment pipelines that must not be interrupted partway through.

While on some platforms cancelling redundant pipelines can be set up with a single toggle, e.g. CircleCI, in GitLab the setting has to be enabled per project and the CI configuration needs to explicitly mark which jobs can be cancelled using the interruptible: true keyword.
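A sketch of how this can look in a pipeline is shown below, assuming the project-level auto-cancel setting is already enabled. The job names and the deploy script are hypothetical.

```yaml
stages:
  - test
  - deploy

unit-tests:
  stage: test
  # Safe to stop if a newer pipeline starts on the same ref.
  interruptible: true
  script:
    - bundle exec rspec

deploy-production:
  stage: deploy
  # Must run to completion once started, so it is never auto-cancelled.
  interruptible: false
  script:
    - ./scripts/deploy.sh  # hypothetical deploy script
```

By default, once a non-interruptible job has started, GitLab stops auto-cancelling that pipeline, which is what protects a deployment that is already in flight.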

Results

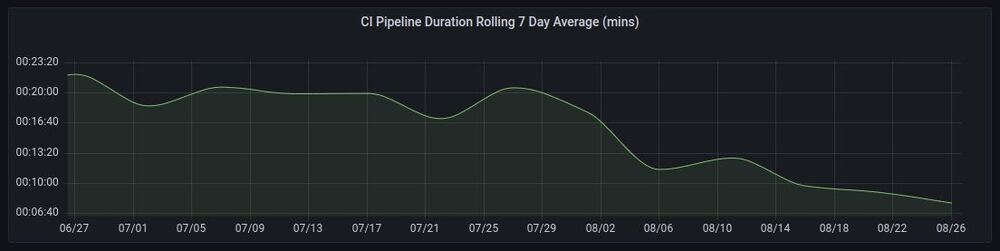

Over the 90+ pipelines worked on, there was an average 70% decrease in runtime, with the main gains coming from pipelines with lots of dependency builds (improved through general caching and layer caching) and pipelines with a high number of steps (improved by moving to Kubernetes).

Overall we saved over 350 hours / month of pipeline run time, which will go a long way in improving our developer productivity and reducing frustration.

Future Improvements

There are still a few improvements that we might make in the future to the pipeline configuration and infrastructure. Some of the notable ones are:

- Create a local pull-through image cache using Docker registry (or another cache application). This will speed up image downloads and reduce internet traffic.

- Create a local distributed cache using MinIO instead of AWS S3. This will provide improved cache speed and no ingress/egress fees from S3. However, it is harder to maintain and less available.

- Parent-child pipelines. This will allow us to split the larger pipelines into independent, more manageable sections.

Conclusion

Overall, by changing a few key aspects of where and how our CI/CD pipelines run and adding distributed caching, we’ve managed to decrease our average pipeline duration by 70% 😄

The ~350 hours saved doesn’t translate directly into developer time, since developers will likely be working on other things while waiting for long pipelines. It will certainly help on the shorter pipelines, though, such as merge request tests, where developers no longer need to context switch because the pipelines only take a few seconds to a few minutes.

Along with saving the developers time and making their experience better, the decrease in pipeline duration has greatly improved our merge to deployment time, which will help with incident recovery and the outer development feedback loop.